1. Introduction: The Imperative of AI Integration

The rapid emergence of generative artificial intelligence (GenAI) demands a fundamental shift in instructional design across higher education, particularly within business and technology curricula (Mishra, n.d.; MDPI, 2024; Frontiers in Education, 2025). To prepare students for a workforce increasingly augmented by AI, educational institutions must develop evidence-based models for ethical and effective GenAI integration (AACSB, 2025).

This report serves as a pedagogical blueprint, detailing the successful design, implementation, and rigorous evaluation of a first-year undergraduate course component, “Excel Assessment 1: Microsoft Copilot (Gen AI) Integration.” This intervention was specifically designed to strengthen foundational Excel skills while simultaneously cultivating GenAI-based problem-solving and digital literacy, offering a transferable model for future educators navigating the complexities of AI adoption. The instructional approach strategically leveraged Microsoft Copilot as an AI assistant (Microsoft, n.d.), positioning it as a tool for accelerated productivity, data preparation, and insight generation (Microsoft, n.d.; ScholarWorks, 2021).

2. Theoretical Grounding: The AI-TPACK Framework

The design of this course component is grounded in the evolution of the Technological Pedagogical Content Knowledge (TPACK) framework, which serves as a critical lens for harmonizing technology with pedagogy and content (Mishra, n.d.; Frontiers in Education, 2025). Specifically, the project aligns with the emerging AI-TPACK model, which emphasizes the cognitive aspects of AI education, or “AI literacy,” within sustainable teaching practices (MDPI, 2024).

The course design successfully balanced the three core knowledge areas:

- Content Knowledge (CK): Deep knowledge of Excel functions, data cleaning, and business analytics principles (Provided Data).

- Technological Knowledge (TK): Specific use of Microsoft Copilot to explain functions and automate routine work (Provided Data).

- Pedagogical Knowledge (PK): Use of instructor-filmed video tutorials and applied exercises to guide students (Provided Data).

By ensuring a balanced integration of these elements, the course aimed to enrich traditional instruction and reinforce critical thinking, thereby avoiding over-reliance on GenAI (Frontiers in Education, 2025).

3. Intervention Design: The AI-Augmented Excel Course

The “Excel Assessment 1” was structured as a high-impact, applied learning exercise, bridging classroom concepts with real-world technological applications (AACSB, 2025).

3.1. Assignment Structure and Learning Objectives

The assessment was composed of 10 applied Excel tasks containing 50 total questions, designed to reinforce core data literacy and analytics skills. The tasks required students to execute a full analytical workflow, ranging from basic formatting to complex interpretation:

- Format data appropriately (currency, percentage, etc.).

- Calculate Total Costs, Profit, and Profit Margin using formulas.

- Apply conditional formatting to highlight Top 5 Stores and identify At-Risk margins.

- Sort and filter entire datasets while maintaining data integrity.

- Build PivotTables and PivotCharts to analyze Sales Revenue, Profit Margin, and Regional Trends.

- Interpret and present analytical insights in a clear, business-ready format.

- Use Microsoft Copilot to explain functions and accelerate routine Excel work.
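The calculation and highlighting tasks in this list can be illustrated outside Excel with a minimal Python sketch. It is an illustration only, not the course's workbook: the store rows, the column names (`cogs`, `opex`), and the 10% at-risk threshold are assumptions made for the example. It takes profit as revenue minus total costs and profit margin as profit divided by revenue.

```python
from collections import defaultdict

# Hypothetical sample rows: store names, regions, and figures are invented.
stores = [
    {"store": "A", "region": "North", "revenue": 1000.0, "cogs": 600.0, "opex": 200.0},
    {"store": "B", "region": "North", "revenue": 800.0, "cogs": 500.0, "opex": 260.0},
    {"store": "C", "region": "South", "revenue": 1200.0, "cogs": 700.0, "opex": 200.0},
]

AT_RISK_MARGIN = 0.10  # assumed cutoff; the assessment's actual rule is not stated


def add_metrics(row):
    """Return a copy of the row with total costs, profit, and profit margin."""
    total_costs = row["cogs"] + row["opex"]
    profit = row["revenue"] - total_costs
    margin = profit / row["revenue"] if row["revenue"] else 0.0
    return {**row, "total_costs": total_costs, "profit": profit, "margin": margin}


def margin_by_region(rows):
    """PivotTable-style summary: profit margin aggregated per region."""
    totals = defaultdict(lambda: {"revenue": 0.0, "profit": 0.0})
    for r in rows:
        totals[r["region"]]["revenue"] += r["revenue"]
        totals[r["region"]]["profit"] += r["profit"]
    return {
        region: (t["profit"] / t["revenue"] if t["revenue"] else 0.0)
        for region, t in totals.items()
    }


rows = [add_metrics(r) for r in stores]

# Analogue of the "Top 5 Stores" conditional format: rank by profit, keep five.
top_stores = sorted(rows, key=lambda r: r["profit"], reverse=True)[:5]

# Analogue of the "At-Risk margins" highlight: margin below the assumed cutoff.
at_risk = [r["store"] for r in rows if r["margin"] < AT_RISK_MARGIN]
```

In a spreadsheet the same logic would normally be written as cell formulas and conditional-formatting rules. The sketch only makes the underlying arithmetic explicit.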

3.2. Instructional Strategies and Scaffolding

The primary pedagogical strategy involved using one hour of instructor-filmed video tutorials that guided students step-by-step through each process. This aligns with best practices for instructional video design, which recommend using conversational language, keeping videos brief and segmented, and embedding videos within an active learning context (Mayer & Fiorella, 2014; Cornell University, n.d.).

Crucially, Microsoft Copilot served as a form of AI-based interactive scaffolding. The instruction to “Use Microsoft Copilot to explain functions and accelerate routine Excel work” gave learners support for problems they might not have been able to solve unaided. Copilot accelerates routine tasks such as data cleaning and generating complex formulas (Microsoft, n.d.), offloading low-level cognitive tasks to the AI (IBM, n.d.) and freeing the learner to focus on higher-level problem solving, creative direction, and strategic analysis (ScholarWorks, 2021; IBM, n.d.). The course thus positions technology to extend the limits of human cognition rather than replace it (National Institutes of Health, n.d.).

4. Results and Data-Driven Evaluation

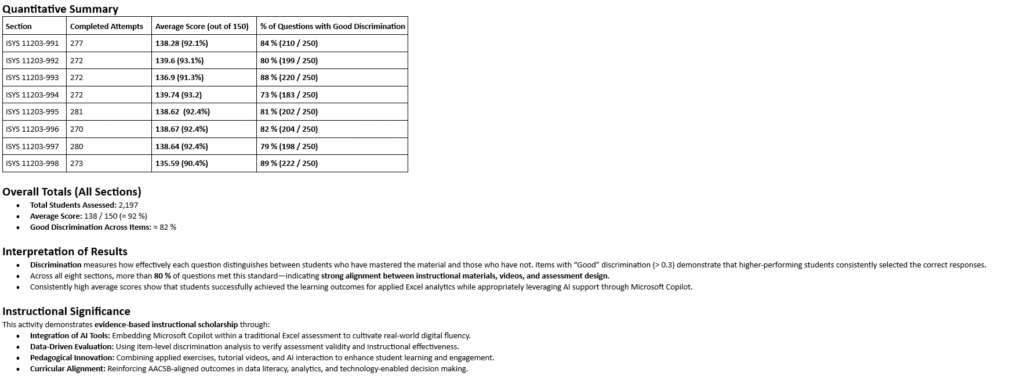

The assessment was rigorously evaluated using item-level discrimination analysis to verify its validity and instructional effectiveness. The results below represent the aggregated performance across 8 total sections of the course.

| Section | Completed Attempts | Average Score (out of 150) | % of Questions with Good Discrimination |
| --- | --- | --- | --- |
| ISYS 11203-991 | 277 | 138.28 (92.1%) | 84% (210/250) |
| ISYS 11203-992 | 272 | 139.6 (93.1%) | 80% (199/250) |
| ISYS 11203-993 | 272 | 136.9 (91.3%) | 88% (220/250) |
| ISYS 11203-994 | 272 | 139.74 (93.2%) | 73% (183/250) |
| ISYS 11203-995 | 281 | 138.62 (92.4%) | 81% (202/250) |
| ISYS 11203-996 | 270 | 138.67 (92.4%) | 82% (204/250) |
| ISYS 11203-997 | 280 | 138.64 (92.4%) | 79% (198/250) |
| ISYS 11203-998 | 273 | 135.59 (90.4%) | 89% (222/250) |
| Overall Totals (All Sections) | Total Students Assessed: 2,197 | Average Score: 138/150 (≈ 92%) | Good Discrimination Across Items: ≈ 82% |
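The overall row can be cross-checked from the per-section figures. The following Python sketch assumes that the overall average is weighted by completed attempts and that the overall discrimination rate pools the item counts across sections. The report does not state how its totals were derived, so both choices are assumptions.

```python
# Per-section figures copied from the table:
# (section, completed attempts, average score, items with good discrimination)
sections = [
    ("ISYS 11203-991", 277, 138.28, 210),
    ("ISYS 11203-992", 272, 139.60, 199),
    ("ISYS 11203-993", 272, 136.90, 220),
    ("ISYS 11203-994", 272, 139.74, 183),
    ("ISYS 11203-995", 281, 138.62, 202),
    ("ISYS 11203-996", 270, 138.67, 204),
    ("ISYS 11203-997", 280, 138.64, 198),
    ("ISYS 11203-998", 273, 135.59, 222),
]

MAX_SCORE = 150          # "out of 150" in the table header
ITEMS_PER_SECTION = 250  # denominator shown in the discrimination column

total_attempts = sum(n for _, n, _, _ in sections)

# Attempt-weighted mean score (assumed aggregation method).
weighted_mean = sum(n * score for _, n, score, _ in sections) / total_attempts

# Pooled share of items with good discrimination (assumed aggregation method).
good_items = sum(good for _, _, _, good in sections)
overall_discrimination = good_items / (ITEMS_PER_SECTION * len(sections))

print(f"Attempts: {total_attempts}")
print(f"Weighted mean: {weighted_mean:.2f} / {MAX_SCORE} "
      f"({weighted_mean / MAX_SCORE:.1%})")
print(f"Good discrimination: {overall_discrimination:.1%}")
```

Under these assumptions the sketch gives 2,197 attempts, a mean of about 138.26 out of 150, and 1,638 of 2,000 items (about 82%) with good discrimination, which agrees with the reported totals.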

4.1. Interpretation of Results

- High Achievement (Average Score): The consistently high average score across all 8 sections (≈ 92%) demonstrates that students successfully achieved the learning outcomes for applied Excel analytics while appropriately leveraging AI support through Microsoft Copilot. While high scores in AI-supported assessments require careful interpretation, the findings indicate that students generally found the tool helpful in supporting their learning and understanding of the related mathematics and concepts (Center for Engaged Learning, n.d.).

- Assessment Validity (Item Discrimination): The assessment was highly effective at measuring mastery. Discrimination measures how effectively each question distinguishes between students who have mastered the material and those who have not (University of Arizona, n.d.). A discrimination index above 0.3 is considered “good” and optimal for assessment integrity (University of Arizona, n.d.; University of Washington, n.d.). With roughly 82% of questions overall meeting this standard (per-section values range from 73% to 89%), the analysis supports strong alignment between the instructional materials, video tutorials, and the assessment design.
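The report does not say which discrimination statistic the item analysis used; common choices are the upper-lower group index and the point-biserial correlation. As an illustration only, the sketch below computes the upper-lower index for one question: the proportion of correct answers in the top-scoring group minus the proportion in the bottom-scoring group. The 27% group size, the function name, and the sample data are assumptions. Only the 0.3 threshold comes from the text above.

```python
GOOD_THRESHOLD = 0.3  # "good" discrimination threshold cited in the report


def discrimination_index(item_correct, total_scores, group_fraction=0.27):
    """Upper-lower discrimination index D for a single question.

    item_correct: 1 if the student answered this question correctly, else 0.
    total_scores: each student's total assessment score, in the same order.
    group_fraction: share of students in each comparison group (assumed 27%).
    """
    if len(item_correct) != len(total_scores) or not total_scores:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(total_scores)
    k = max(1, round(n * group_fraction))
    # Rank student indices from highest to lowest total score.
    order = sorted(range(n), key=lambda i: total_scores[i], reverse=True)
    upper, lower = order[:k], order[-k:]
    p_upper = sum(item_correct[i] for i in upper) / k
    p_lower = sum(item_correct[i] for i in lower) / k
    return p_upper - p_lower


# Hypothetical data for ten students on one question.
scores = [95, 90, 85, 80, 75, 70, 65, 60, 55, 50]
correct = [1, 1, 1, 1, 0, 1, 0, 0, 1, 0]

d = discrimination_index(correct, scores)
is_good = d > GOOD_THRESHOLD
```

In this invented example all three top-group students and one of the three bottom-group students answer correctly, so D is about 0.67 and the question counts as good under the 0.3 threshold.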

5. Pedagogical Implications for Future Educators

The successful outcome of this intervention generates three core design principles for educators seeking to integrate GenAI effectively:

5.1. Principle 1: Position AI as Scaffolding, Not a Substitute

The course design successfully integrated AI as a tool for interactive and adaptive learning (Truth for Teachers, n.d.). Rather than banning the technology, the course explicitly required students to use Copilot to “explain functions” and “accelerate routine Excel work”. This aligns with the concept of prompt engineering as a core skill, teaching students to “craft precise, thoughtful inputs for AI tools to produce effective outputs” (eSchool News, 2025; Coursera, n.d.).

This scaffolding approach helps mitigate the risk of cognitive offloading, where students delegate their thinking to the AI (Evidence-Based Education, n.d.). By requiring students to interpret the analytical insights derived from the data, the assessment prevented them from fully offloading the higher-order tasks, ensuring the human element remained focused on creative direction and strategic analysis (IBM, n.d.; ScholarWorks, 2021).

5.2. Principle 2: Align the Curriculum with Future of Work Standards

The project provides evidence-based instructional scholarship by reinforcing AACSB-aligned outcomes in data literacy, analytics, and technology-enabled decision making. By requiring hands-on interaction with Copilot in real-world scenarios, the course helps students develop the essential analytical and evaluative skills needed for a digitally driven business environment (AACSB, 2025; Deloitte, n.d.). Educators must follow this model by preparing students to view AI as a tool for augmenting, rather than replacing, human expertise, thus future-proofing the workforce (IBM, n.d.; ScholarWorks, 2021).

5.3. Principle 3: Manage Academic Integrity Through Transparency

The course component managed academic integrity challenges by overtly embedding the use of Microsoft Copilot into the assignment structure. Because tools like Copilot can blend AI- and human-generated content, the biggest challenge for educators is determining where student thinking ends and AI contribution begins (Trust, n.d.).

By explicitly permitting and requiring AI use, the educators established clear parameters for students (Ahuna & Grady, n.d.; CSUSM, n.d.). This transparency is critical, as academic integrity policies vary widely by course and assignment (Ahuna & Grady, n.d.; CSUSM, n.d.). The results indicate that this pedagogical innovation, which combines applied exercises, tutorial videos, and required AI interaction, can enhance student learning and engagement while maintaining the validity of the assessment.

References

AACSB (2025). Linking class material to jobs with generative AI.

Ahuna, K., & Grady, M. (n.d.). Academic integrity in the age of generative AI (Episode 10). UB Center for the Advancement of Teaching.

Center for Engaged Learning (n.d.). Analyzing an artificial intelligence-supported assessment and student feedback.

Cornell University Center for Teaching Innovation (n.d.). Effective videos.

Coursera (n.d.). Generative AI tools: Prompt engineering.

CSUSM Library and Technology Services (n.d.). Artificial intelligence and academic integrity.

Deloitte (n.d.). Generative AI and the future of work.

eSchool News (2025). Prompt engineering: A critical new skillset for 21st century teachers.

Evidence-Based Education (n.d.). Cognitive offloading: What is it and why is it important?

Frontiers in Education (2025). Navigating the complexities of GenAI integration in education.

IBM (n.d.). AI and the future of work.

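Mayer, R. E., & Fiorella, L. (2014). Principles for reducing extraneous processing in multimedia learning: Coherence, signaling, redundancy, spatial contiguity, and temporal contiguity principles. In R. E. Mayer (Ed.), The Cambridge Handbook of Multimedia Learning (2nd ed.). Cambridge University Press.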

MDPI (2024). Investigating the relationships within the AI-TPACK framework and its constituent knowledge elements.

Microsoft (n.d.). AI in Excel.

Microsoft (n.d.). Microsoft Copilot resources for education.

Mishra, P. (n.d.). Researchers apply TPACK framework to AI learning. Arizona State University.

National Institutes of Health (n.d.). The long-term effects of cognitive offloading in learning and memory.

ScholarWorks (2021). Pedagogical models for generative AI in higher education. CSUSB.

Tandfonline (2025). AI-based interactive scaffolding in learning: A literature review.

Truth for Teachers (n.d.). AI for scaffolds, supports, and differentiated tasks.

Trust, T. (n.d.). Tool temptation: AI’s impact on academic integrity. UMass Amherst.

University of Arizona College of Medicine (n.d.). Item analysis.

University of Washington (n.d.). Item analysis report.
