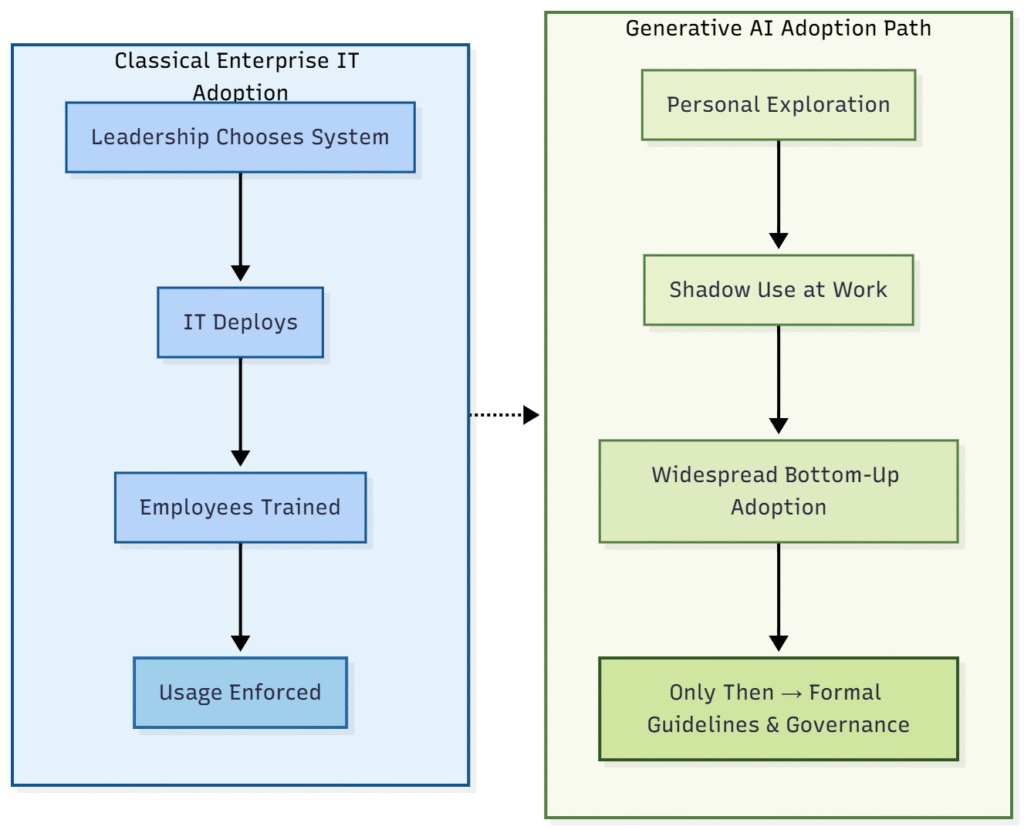

There’s a familiar pattern we see across industries right now. A leadership team announces a new AI initiative. A policy memo goes out. Training modules are uploaded. Teams are encouraged, sometimes strongly, to “embrace AI in their workflow.”

And then… very little changes.

Sure, a handful of enthusiastic early adopters start experimenting. Some teams build prototype use cases. A few prompt-sharing channels appear in Slack or Teams. But the majority of knowledge workers remain cautiously distant. AI stays at the edge of real work rather than embedded in the daily operational core.

Most organizations respond with the predictable next step: more training, more governance frameworks, more executive messaging.

But through our research, we’ve come to a different conclusion.

The problem isn’t awareness. It isn’t even access.

The real challenge is that most leaders still think about AI adoption as a single decision, when in reality, it’s a sequence of behavioral events that unfold over time.

The Hidden Layer Shaping AI Success: Mid-Career Professionals

When people talk about AI adoption, they usually focus on two groups:

- Executives, who design strategy and issue governance directives.

- Early-career employees, who experiment with new tools enthusiastically and adapt fast.

But in practice, neither of these groups determines whether AI becomes normalized in daily workflows.

The real power sits in a different layer: mid-career professionals. These operational gatekeepers include project leads, managers, senior analysts, medical coordinators, principal engineers, and regional officers. Senior enough to define “how work gets done here,” they are also embedded enough in daily work to shape the practices of the teams beneath them.

They carry something the AI strategy decks rarely consider: workflow authority paired with professional identity.

Executives — Strategy, Governance, Mandates

⬇

Mid-Career Professionals (Operational Gatekeepers) — Translate AI into Routine Work

⬇

Early-Career Employees — Follow Local Workflow Norms

If AI doesn’t pass through the mid-career layer, it never becomes routine.

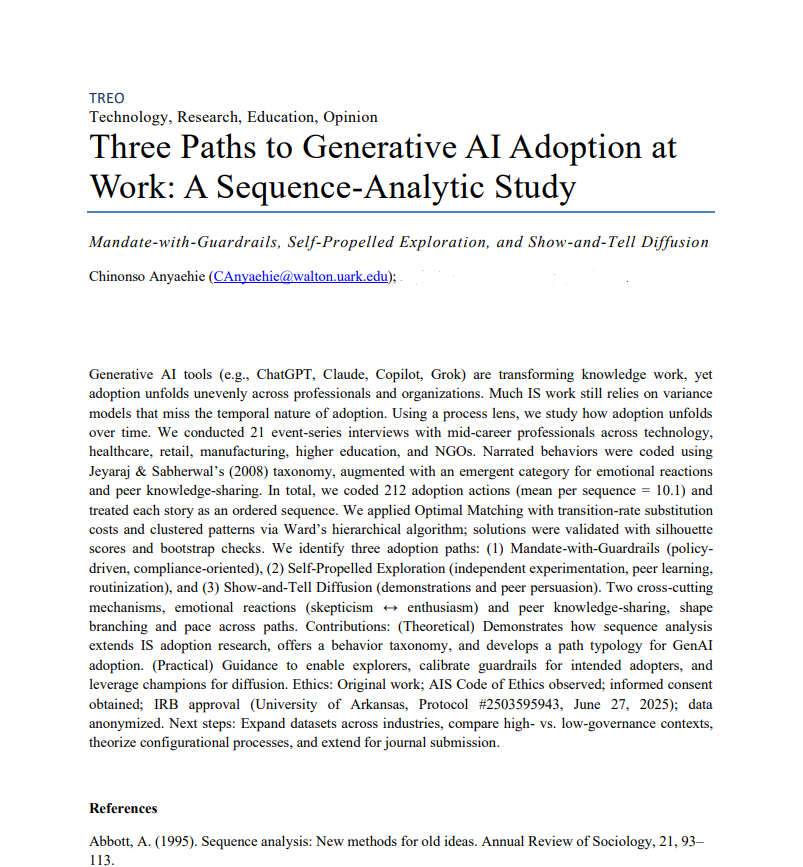

What We Did Differently

Rather than surveying attitudes or intentions, we conducted event-sequence interviews with 21 mid-career professionals across industries including technology, healthcare, retail, manufacturing, non-profits, and academia.

Instead of asking, “Do you use AI?” we asked,

“Tell us what happened the first time you encountered AI at work. Then what happened next?”

We captured 212 behavioral events, converted them into coded action sequences, and used sequence analysis—a method borrowed from bioinformatics—to trace exactly how adoption unfolded over time.
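The article does not say which sequence-analysis algorithm the study used. One common choice in social-science sequence analysis is optimal matching, an edit-distance method adapted from the alignment algorithms used to compare DNA and protein sequences. The Python sketch below shows the idea under stated assumptions: the event codes (EMOTION, PEER_SHARING, GOVERNANCE) and the insertion/deletion and substitution costs are hypothetical illustrations, not the study's coding scheme or parameters.

```python
# Minimal optimal-matching (edit-distance) sketch for coded event sequences.
# ASSUMPTION: event codes and costs are hypothetical, not the study's actual scheme.
from typing import Sequence


def optimal_matching(a: Sequence[str], b: Sequence[str],
                     indel: float = 1.0, sub: float = 2.0) -> float:
    """Minimum total cost to transform sequence a into sequence b.

    indel: cost of inserting or deleting one event.
    sub:   cost of substituting one event code for a different one.
    """
    n, m = len(a), len(b)
    # dist[i][j] holds the cheapest alignment cost of a[:i] with b[:j].
    dist = [[0.0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        dist[i][0] = i * indel
    for j in range(1, m + 1):
        dist[0][j] = j * indel
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = 0.0 if a[i - 1] == b[j - 1] else sub
            dist[i][j] = min(
                dist[i - 1][j] + indel,     # delete a[i-1]
                dist[i][j - 1] + indel,     # insert b[j-1]
                dist[i - 1][j - 1] + step,  # keep a match or substitute
            )
    return dist[n][m]


# Two hypothetical teams: same three elements, opposite order.
team_a = ["EMOTION", "PEER_SHARING", "GOVERNANCE"]
team_b = ["GOVERNANCE", "PEER_SHARING", "EMOTION"]

print(optimal_matching(team_a, team_a))  # 0.0
print(optimal_matching(team_a, team_b))  # 4.0
```

Identical sequences score 0, and reordering the same elements already produces a nonzero distance, which is why order-aware methods suit this kind of data. Grouping teams by a matrix of such pairwise distances is one way trajectories can be clustered into paths, though the article does not state that this is how its three adoption paths were derived.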

The Core Insight: Adoption Is Not a Switch—It’s a Choreography

When we mapped these sequences, a clear pattern emerged:

- Adoption didn’t happen when a mandate was issued.

- It didn’t happen when someone completed training.

- It happened when a specific sequence of events aligned:

  - Emotion: curiosity, excitement, or skepticism sparked momentum (or stalled it).

  - Peer knowledge-sharing: someone shared a prompt, a success story, or a workaround.

  - Governance timing: policy supported momentum rather than suppressing it.

The order in which these elements appeared determined which adoption path the team followed.

This is the crucial shift: the impact came not from the elements themselves but from their sequence.

The Three Adoption Paths We Identified

Based on these sequences, three distinct adoption trajectories emerged:

1. Mandate-with-Guardrails

- A light mandate signals legitimacy.

- Training follows quickly.

- Peer coalitions emerge organically.

- Prompt-sharing or templates circulate, accelerating full adoption.

Leadership takeaway: Policy works best when paired with social amplification and visible peer proof, not as a standalone directive.

2. Self-Propelled Exploration

- Individuals begin experimenting on their own—driven by curiosity, not instruction.

- The organization later provides access or small nudges, not heavy policy.

- Peer success stories trigger replication.

Leadership takeaway: Not every team needs a mandate—some need freedom first, policy second.

3. Show-and-Tell Diffusion (Skeptic Conversion Path)

- Early emotion is skepticism, caution, or distrust.

- Adoption only begins after seeing a peer succeed live (not in an abstract case study).

- Mandates sometimes soften to enable voluntary re-engagement.

Leadership takeaway: Skeptics do not convert through pressure—they convert through credible demonstration and coaching.

Strategic Implication: Stop Designing Policies. Start Designing Sequences.

Most AI adoption playbooks focus on governance, training, and tooling. These are necessary but insufficient. What our data shows is:

The real design task is sequencing.

A mandate before curiosity? → Resistance.

Training after peer success stories? → Acceleration.

Peer knowledge-sharing after training? → Coalition momentum.

Peer knowledge-sharing without any governance clarity? → Innovation silos and quiet anxiety.

The same elements—training, mandate, peer influence—produce very different outcomes depending on their position in the sequence.
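The four if-then patterns above can be read as order checks on a team's event log. The Python sketch below is a toy encoding of them: the event labels (MANDATE, CURIOSITY, PEER_SUCCESS, PEER_SHARING, TRAINING, GOVERNANCE), the helper names, and the reduction of “governance clarity” to the mere presence of a GOVERNANCE event are all hypothetical simplifications, not a model from the study.

```python
# Toy order checks for the sequencing heuristics; labels and rules are hypothetical.
from typing import List, Sequence


def first_index(seq: Sequence[str], event: str) -> int:
    """Position of the first occurrence of event, or -1 if it never occurs."""
    return seq.index(event) if event in seq else -1


def happens_before(seq: Sequence[str], first: str, second: str) -> bool:
    """True if both events occur and first's earliest occurrence precedes second's."""
    i, j = first_index(seq, first), first_index(seq, second)
    return i != -1 and j != -1 and i < j


def sequence_flags(seq: Sequence[str]) -> List[str]:
    flags = []
    mandate, curiosity = first_index(seq, "MANDATE"), first_index(seq, "CURIOSITY")
    # A mandate before curiosity (or with no curiosity at all) -> resistance risk.
    if mandate != -1 and (curiosity == -1 or mandate < curiosity):
        flags.append("resistance risk")
    # Training after peer success stories -> acceleration.
    if happens_before(seq, "PEER_SUCCESS", "TRAINING"):
        flags.append("acceleration")
    # Peer knowledge-sharing after training -> coalition momentum.
    if happens_before(seq, "TRAINING", "PEER_SHARING"):
        flags.append("coalition momentum")
    # Peer knowledge-sharing with no governance event -> silo / anxiety risk.
    if "PEER_SHARING" in seq and "GOVERNANCE" not in seq:
        flags.append("silo / anxiety risk")
    return flags


rollout_a = ["MANDATE", "CURIOSITY", "PEER_SHARING", "TRAINING", "GOVERNANCE"]
rollout_b = ["CURIOSITY", "GOVERNANCE", "TRAINING", "PEER_SHARING", "MANDATE"]

print(sequence_flags(rollout_a))  # ['resistance risk']
print(sequence_flags(rollout_b))  # ['coalition momentum']
```

Both hypothetical rollouts contain the same five events; only their order differs, and the flags change accordingly.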

This is why many AI rollouts feel stalled: the stages are there, but the order is wrong.

So, What Should Leaders Do?

Instead of asking:

“How do we get people to adopt AI?”

Ask:

“Where are our teams in their adoption sequence, and what is the next right action to move them forward?”

That shift—from adoption as compliance to adoption as sequence design—is where AI transformation becomes real.

✅ Call to Action

If you’re leading an AI initiative right now, don’t start with a policy playbook. Start by mapping your organizational sequence hotspots:

- Where is curiosity already active?

- Where is peer knowledge-sharing already happening?

- Where are skeptics waiting for proof, not policy?

- Where are mandates being issued too early or too late?

AI transformation isn’t about announcing a future state. It’s about engineering better sequence flows in the present.
